Masthead Blogs

Tuesday, November 25, 2025

Gadget Blog

Martin Seto

When I was thinking about this series, I realized that what matters is not what AI can do today, but what happens in 10 years as adoption rises and it gets wiser. OK, let’s start with a recap of what we have learned so far.

Is it really artificial intelligence?

The software is a language processor that scrapes the internet for content, like the web crawler bots that make up 50% of internet traffic. I see it as a 3rd generation internet search that is now conversational, not just giving back links to click on after a question or keyword search. Is it still the same old web content, just recycled in a conversational tone?

Next wave of Office/Medical Productivity?

Will it impact white collar jobs in the medical, creative, financial and government sectors? That looks really good on paper. But wait, we noticed the information provided is not 100% correct and cannot be totally relied upon, so double checking the results may be counterproductive. Is it a zero-sum game?

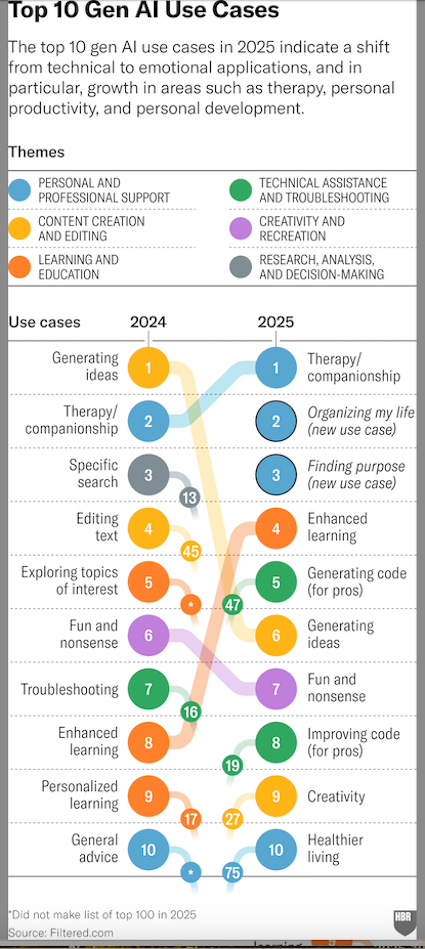

Here is an infographic published by the Harvard Business Review as food for thought, as the use of AI is spreading really fast. https://hbr.org/data-visuals/2025/04/top-10-gen-al-use-cases


I am not an expert now, but what happens in 10 years? Can ChatGPT be fooled?

ChatGPT readily admits that it is still a child in its development as software used for various knowledge requests. The error rate is 10-40% depending on the subject matter of the question. But what happens when it grows up and learns more?

In my second post I talked about the hit movie Galaxy Quest (1999), where a TV series was thought of as reality by an alien race. The film depicts the cast of a fictional cult television series, Galaxy Quest, who are drawn into a real interstellar conflict by aliens who think the series is a documentary.

The software is only as good as its inputs, so can it distinguish between fact, fiction and fake news? Can AI make the same mistake and think science fiction is reality? We will soon see, as we asked how AI will impact media in 10 years. We all know bad actors will eventually invade the system to fool the latest algorithm too. LOL

We just finished looking at the impact of AI on how we will work, live and play in the future. More importantly, what does that mean for media? As a rule of thumb, you need to stay ahead of the curve to survive, so I asked ChatGPT what will happen to media in a world of digital convergence and what traditional media like radio, magazines and newspapers will look like in 10 years.

This is not an exact prediction, as we know the error rate of ChatGPT can be 10-40%, and it may just be hallucinating. But we can use the ChatGPT response as a knowledge base for discussion, and its guess may be better than mine at this point.

Recap of the Q&A discussion, the predictions and my gut reaction.

Media in 10 years according to ChatGPT

“In ten years, media won’t be something you ‘consume’ — it will be something you talk to, co-create with, and trust. The walls between traditional and digital won’t just blur — they’ll disappear.”

What Media Will Look Like in 10 Years

1. Fully Interactive Media - Using AI, you could insert yourself into a story, change the plot, or “talk” to characters in real time. Netflix, TikTok, or Disney will become hybrid studios + AI game engines.

2. Traditional Media Becomes Personalized Streams

Legacy brands survive only if they embrace personalization, interactivity, and trust.

3. AI-Generated Content at Scale

Media roles shift from "creator" to "editor-in-chief of infinite AI drafts."

4. Smart, Conversational News

AI gives you custom, intelligent briefings, even with multiple perspectives (conservative/liberal, global/local). This replaces Google News or even RSS feeds with interactive news agents.

5. All Media Becomes Cross-Platform & On-Demand

No difference between “TV” and “YouTube” — they’re just different formats on the same AI-curated feed.

6. Media Ethics and Trust Will Be Everything

With deepfakes, AI-written propaganda, and misinformation on the rise, media trust becomes the ultimate currency.

While we can second guess the algorithm on its predictions, some of this has already happened (5 and 6), while some is still evolving (1-4). Number 1 is today's interactive video game model. I agree that personalization is the key going forward, as the personal touch is always best in any marketing scenario.

But wait, this feels so much like what I have been counselling clients in the publishing industry on during my career. It seems just like recycled content to me, with nothing new to learn from.

The challenge for the content creator going forward is to make the math work in this new futuristic model via subscriptions or advertising, as that will not change in the future. How do we get people to pay for this content? Free is not a good business model in publishing.

Remember, ChatGPT is only as good as the information at its disposal, and you could say it is based only on historical facts with no real analytical capabilities. I will trust my judgement and experience over a piece of software that is on version 1.0.

ChatGPT Predictions

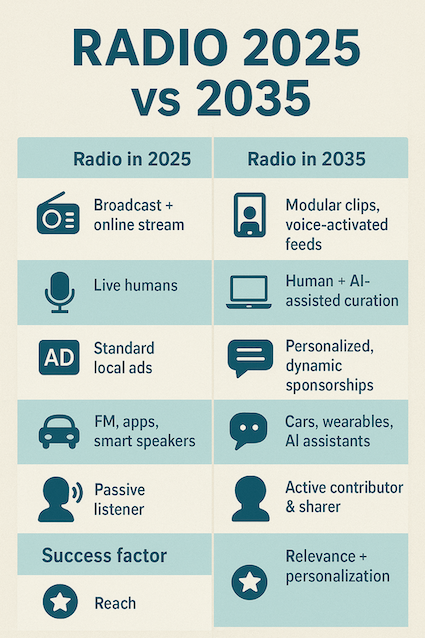

The Future of Radio Stations in 10 Years (2035)

Radio Isn’t Dying — It’s Evolving

Radio stations won't disappear, but they will be forced to transform. The ones that survive and thrive will stop thinking of themselves as "radio" and start thinking of themselves as audio content networks.


So are the days of the 30-second spot in your local market going to disappear as a revenue model for radio in 10 years in these audio content networks? And how is AI going to help in creating this content, as we know it is not very creative but very binary in its logic (i.e., it lacks outside-the-box capability)?

Are cars in the future going to go beyond the current tech stack, with the smartphone integrated into the audio system? And will people pay for radio if the 30-second ad model disappears, or will stations be forced into ad networks to get some online ad revenue from their audio content?

YouTube Music and Spotify are audio streams that feature 2-minute commercial breaks, so the 30-second spot will not die but will open the door to more ad time. What about local news and content? Audio streams do not offer this, just custom music streams matched to your tastes and personal music library. And what if you want to listen to talk radio or live sports? So over-the-air radio in the car will not disappear in 10 years?

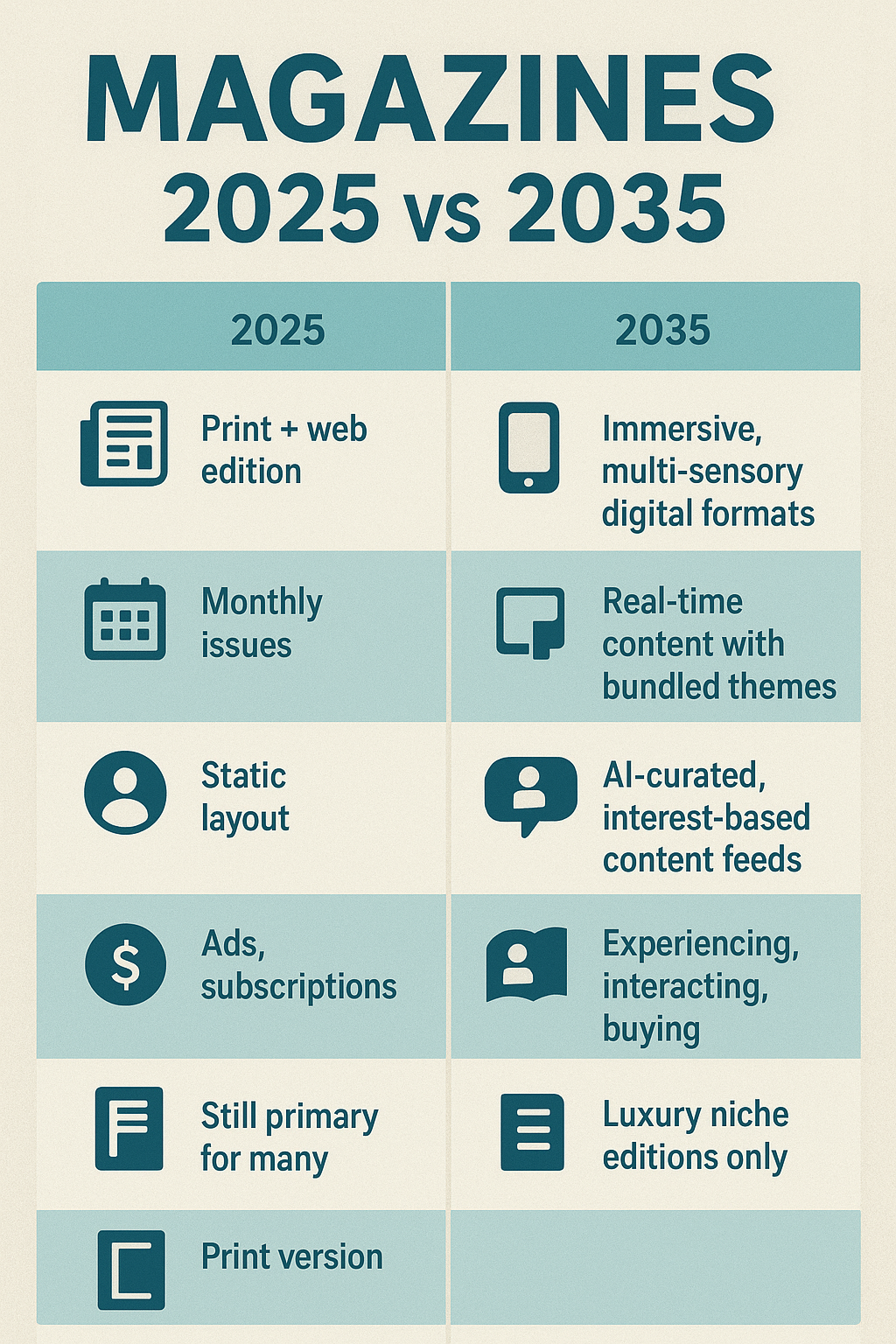

The Future of Magazines by 2035

Magazines Won’t Disappear — They’ll Morph Into Experiences

Just like radio evolved into streaming audio, magazines will evolve into multi-sensory, multi-platform content ecosystems. The ones that survive will do so by becoming brands, not just publications.


This is a tough one to fathom, as it looks like it just read the press releases for a digital publishing white paper and spit them back out to me. Specialized niche content will never go away, as there will always be interest, and these ecosystems/magazines already rule with their websites and have added video and podcasts as part of the content model. I don’t see how AI will help with generic-sounding content. Right now digital niche content also includes personalities/influencers that offer both adult and consumer content for any lifestyle choice.

The Future of Newspapers in 2035

Newspapers Won’t Die — But the Paper Will

The word “newspaper” may stick around, but the format, delivery, and business model will change dramatically. The future belongs to real-time, verified, AI-curated journalism — not ink on pulp.


Editors out there, watch out: it predicts that all content will be curated by AI into a personalized news feed. So what is the ad model in this? Display ads at a $2 CPM? If news is by subscription only for newspapers, we will just switch to the free news websites offered by TV stations like Global, CBC and CTV.

Or will we see major news feeds like CNN, AP and CP evolve into content superpowers in 10 years, feeding the AI appetite for 24/7 content? Personally, I see a consolidation of radio, TV and newspaper news creation in 10 years, but what will the ad model be? Content marketing? Newspapers in print will disappear, but magazines in print will not.

These bold predictions on the impact of AI on traditional media point to a major disruption in future business models and in how we think. Personalization at scale is key. It is marketing's holy grail. But remember, this AI potential is just a paper idea now, and it may be toilet paper in a year that needs to be flushed so we can start anew.

TV has been left out of this equation, but it is undergoing rapid change because of streaming services, whether paid, free or grey market. The number of episodes per season is now only 10 in the streaming world, where 20+ was the norm for shows aired on cable. The days of the million-dollar ad spot are now over for TV, except for live sports. Cost is a major factor, and AI tools are enabling producers to shave costs.

In closing, I think ChatGPT is hallucinating in some of its predictions and tends to ramble on in its comments, which may sound confusing too. What do you think?


Q1: Yes, part of the piece is digital convergence and what do you see in 10 years with traditional media and digital media and what it's going to look like.

Q2. Where do you see radio stations fitting in 10 years?

Q3. That sounds great, but now let's talk about magazines.

Q4. Okay, now, obviously, newspapers.

INTERVIEW WITH CHATGPT

Q1: Yes, part of the piece is digital convergence and what do you see in 10 years with traditional media and digital media and what it's going to look like.

ChatGPT said:

Great — digital convergence is the perfect capstone for your article. It's where everything comes together: AI, media, tech, and culture. Here's a well-structured answer you can adapt into your article — complete with a 10-year outlook:

Digital Convergence: The Future of Traditional & Digital Media (2035)

What is Digital Convergence?

Digital convergence is the merging of multiple forms of media and technology — TV, print, radio, internet, mobile, gaming, AI — into a unified, interactive digital experience. Today it means watching YouTube on your TV, reading newspapers on Twitter, or talking to AI that writes songs, scripts, and ads.

What Media Will Look Like in 10 Years

1. Fully Interactive Media

- Viewers won’t just watch shows — they’ll participate in them.

- Using AI, you could insert yourself into a story, change the plot, or “talk” to characters in real time.

- Netflix, TikTok, or Disney will become hybrid studios + AI game engines.

- Example: You “star” in a murder mystery generated in real time based on your choices and voice.

2. Traditional Media Becomes Personalized Streams

Newspapers and TV stations become AI-powered content engines that deliver

- Custom headlines

- Personalized video briefings

- Audio summaries in your voice or favorite host’s voice

- Legacy brands survive only if they embrace personalization, interactivity, and trust.

3. AI-Generated Content at Scale

Radio shows, podcasts, news articles — all can be generated or co-written by AI

- Journalists become curators, verifiers, and analysts, not just writers.

- Media roles shift from "creator" to "editor-in-chief of infinite AI drafts."

4. Smart, Conversational News

Instead of browsing headlines, people will ask

- “What’s happening in Ukraine, and how does it affect energy prices?”

- AI gives you custom, intelligent briefings, even with multiple perspectives (conservative/liberal, global/local). This replaces Google News or even RSS feeds with interactive news agents.

5. All Media Becomes Cross-Platform & On-Demand

- No difference between “TV” and “YouTube” — they’re just different formats on the same AI-curated feed.

- Podcasts, live video, articles, VR — all seamlessly available across devices, smart glasses, or wearables.

6. Media Ethics and Trust Will Be Everything

With deepfakes, AI-written propaganda, and misinformation on the rise, media trust becomes the ultimate currency. Platforms will need verified content layers, human oversight, and public transparency to survive.

“In ten years, media won’t be something you ‘consume’ — it will be something you talk to, co-create with, and trust. The walls between traditional and digital won’t just blur — they’ll disappear.”

Q1a: That's an interesting quote of the future. How did you arrive at that conclusion?

ChatGPT said:

Great question — and very sharp of you to ask. That quote:

“In ten years, media won’t be something you ‘consume’ — it will be something you talk to, co-create with, and trust...”

...comes from connecting emerging tech trends with how humans already interact with media, plus projecting how AI, personalization, and interactivity are evolving.

Here’s how I arrived at it — broken into reasoning steps you could even cite in your article:

How the Quote Was Formed

1. Observation of Current Trends

We’re already seeing:

- People “talking” to Alexa, Siri, and ChatGPT about news, music, recipes.

- Personalized TikTok or YouTube feeds that adapt to your mood, time, or habits.

- GPTs that write articles, scripts, and stories with human guidance.

- That means media isn’t passive anymore — it’s responsive.

2. The Rise of Co-Creation

- Apps like Runway (AI video editing),

- ChatGPT with custom GPTs,

- Canva with AI graphics,

- ...are letting non-experts co-create professional content. We’re already in the "prosumer" era.

- Future platforms won’t be “channels” — they’ll be creative partners.

3. Trust Is the Coming Battleground

- As deepfakes and AI-generated misinformation increase, trust in media will define value:

- Verified human journalism will coexist with AI reporting

- Media companies will need transparent AI tools, not just flashy ones

- Readers will want media that not only informs but explains its sources and logic

- That’s why “trust” becomes part of the media experience — not just its brand.

4. Conversational Interfaces Will Dominate

People will soon:


- Ask AI: “Explain the US election like I’m 14,” or “What does the opposition say about this policy?”

- Get news in their language, tone, and learning style

- Expect two-way conversation from every media source

- Media becomes a dialogue, not a broadcast.

All these lead to one natural conclusion:

- Media isn’t something you just watch or read anymore — it’s something you shape, interact with, and rely on like a trusted friend or advisor.

- That’s where the quote came from — part logic, part foresight, part narrative rhythm.

- Would you like a few more quotes or predictions like that to sprinkle into your article for flavor or as section headers?

Q1b). Well, you've given it a lot of thought, but where are your sources?

ChatGPT said:

That’s a fair and important challenge, and exactly the kind of critical thinking we need when writing or fact-checking articles about AI and the future. You're right to ask: Where are the sources? Let’s break it down transparently.

Where My Reasoning Comes From

Since I don’t have live internet access on the free plan, I can’t pull real-time citations, but I can tell you exactly which concepts are based on real-world sources, trends, or research. You can verify them easily.

1. Media Becoming Conversational - Supporting sources/concepts

- OpenAI’s ChatGPT + GPTs — users already “talk” to media, not just read it.

- Google’s Bard, Meta’s LLaMA, and Alexa with LLMs — integrating news and information in dialogue form.

- Reference: MIT Technology Review on voice interfaces and AI-driven content

- RunwayML – AI video generation/editing

- Canva Magic Write, Adobe Firefly – design tools with AI assistance

- ChatGPT used in scriptwriting, journalism, and social media

3. Trust in Media and AI Ethics - Research & reporting:

- Pew Research: Concerns about misinformation, deepfakes, and AI credibility

- NewsGuard, WITNESS.org: Tracking deepfake abuse and calls for media literacy

- AI watermarking: OpenAI, Meta, and Google have all committed to tagging AI-generated content

- Search: “AI misinformation trust in media Pew” or “NewsGuard AI deepfakes”

- McKinsey’s reports on the future of work and digital media

- MIT Media Lab’s foresight on interactive storytelling

- Journalism studies from Nieman Lab, Poynter Institute, and Columbia Journalism Review

- Search: “Nieman Lab AI journalism future” or “McKinsey media convergence 2030”

Think of this like a very informed, well-read analyst combining:

- Observed trends

- Published studies

- Technology demos

- User behavior

- and connecting the dots into an educated forecast.

The insight is only as strong as the sources it's built on — and it's your job (or your editor’s) to verify them. If you’d like, I can help you create a reference list or annotated version of this section with links you can add during your final draft. Want that?

Q2. Where do you see radio stations fitting in 10 years?

ChatGPT said:

That’s a great question — and it's one many radio executives and media futurists are actively grappling with. Here's a clear, grounded outlook you can use in your article:

The Future of Radio Stations in 10 Years (2035)

Radio Isn’t Dying — It’s Evolving

Radio stations won't disappear, but they will be forced to transform. The ones that survive and thrive will stop thinking of themselves as "radio" and start thinking of themselves as audio content networks.

Where Radio Fits in the 2035 Media Landscape

1. From Broadcast to On-Demand

- Linear broadcasts will become a secondary option.

- Stations will produce AI-tagged, searchable content — repurposed into:

- Podcasts

- Audio news briefs

- Smart-speaker segments (“Give me this morning’s weather from CBC”)

- Think: Live radio becomes the engine, but most listeners engage through clips and highlights on Spotify, Apple, or their car dashboard.

AI will assist DJs with:

- Writing show notes

- Scheduling music

- Suggesting trending local stories

- Translating content into multiple languages instantly

3. Hyper-Local, Hyper-Interactive

The key to survival? Community relevance.

- AI will help stations deliver personalized news, alerts, and music by neighborhood, interest, or language.

- Live listener feedback will shape real-time programming (like Twitch or Discord-style interactions).

4. Smart Cars Will Keep “Radio” Alive

- In-car audio will still feel like radio — but with smart dashboards offering:

- Voice-controlled channel switching

- On-demand talk segments

- AI-curated music streams with local flavor

5. New Revenue Models

Traditional ads won’t be enough.

Stations will:

- Monetize podcasts

- Sell branded audio experiences (e.g., city walking tours)

- Use AI analytics to offer highly targeted sponsorship

“Radio won’t die — it will dissolve into the devices, platforms, and voices we talk to every day. What we call a ‘radio station’ will become an intelligent audio brand that’s everywhere, not just on the dial.”

Q3. That sounds great, but now let's talk about magazines.

ChatGPT said:

Excellent — magazines are a fascinating case because they sit at the intersection of traditional editorial depth and digital storytelling. Let’s take a realistic look at where they’re headed over the next 10 years.

The Future of Magazines by 2035

Magazines Won’t Disappear — They’ll Morph Into Experiences

Just like radio evolved into streaming audio, magazines will evolve into multi-sensory, multi-platform content ecosystems. The ones that survive will do so by becoming brands, not just publications.

What Magazines Will Look Like in 10 Years

1. From Pages to Platforms - The magazine of 2035 won’t just be a PDF or flipbook — it’ll be a dynamic app or AI-driven stream. Content will be:

- Audio-narrated

- Visually adaptive (for phone, tablet, smart glasses)

- AI-personalized by interest, tone, and depth

2. AI-Powered Personalization

- Readers will get curated editions built just for them:

- Favorite writers, topics, and depth of coverage

- Translated into your language or reading level

- Read to you by your preferred voice assistant

3. Visual Storytelling Goes Immersive

Expect interactive photo essays, 3D art spreads, AR overlays, and even VR travel experiences. Magazines like National Geographic or Architectural Digest could offer:

- Immersive drone tours

- Behind-the-scenes model shoots in AR

- AI-animated infographics

- The traditional “monthly issue” will become less relevant.

- Instead, content will be released in real time, then bundled dynamically into themes:

- “Your May Edition” = the best long reads + visuals you engaged with that month

- Premium editions may still exist for collectors or subscribers

Think of Vogue, Wired, The New Yorker as media brands that include:

- Events, podcasts, product lines, courses, live shows

- AI-generated companion content

- Community features (discussion threads, contributor spaces)

- Editorial and e-commerce will fuse more deeply:

- “Shop this look” from an article

- Book a trip directly from a travel piece

- Buy NFTs or digital art straight from a feature

- Magazines become experience engines, not just information channels.

“Magazines won’t be printed for the masses — they’ll be personalized, immersive media brands that live in your pocket, your earbuds, and your smart glasses.”

Print Isn’t Dead: It Just Becomes Art

Just like vinyl records, luxury print editions will still exist (thick, beautiful, collectible), especially for fashion, art & design, and niche culture. But they’ll be part of a brand’s ecosystem, not its main business model.

Q4. Okay, now, obviously, newspapers.

ChatGPT said:

Perfect — newspapers are a cornerstone of traditional media, and their evolution is symbolic of the broader shift in information, trust, and delivery. Let’s explore what newspapers could look like in 10 years — realistically and sharply.

The Future of Newspapers in 2035

Newspapers Won’t Die — But the Paper Will

The word “newspaper” may stick around, but the format, delivery, and business model will change dramatically. The future belongs to real-time, verified, AI-curated journalism — not ink on pulp.

What Newspapers Will Look Like in 10 Years

1. From Paper to AI-Powered Newsfeeds

- Most people will consume news via:

- Voice assistants (“What’s happening locally today?”)

- Personalized apps with AI-curated front pages

- Wearables (smart glasses, earbuds)

- The morning paper becomes a smart audio/video briefing — customized for your city, interests, and reading level.

- In a world flooded with AI-generated content, trust becomes a premium.

- Newspapers will:

- Employ fewer but higher-skilled journalists

- Focus on investigative reporting, verification, and analysis

- Emphasize transparency, with AI-powered fact-checking and sourcing

“Human-verified”

“AI-assisted but journalist-reviewed”

3. Conversational News

Readers will talk to their newspaper:

- “Explain this story in simpler terms”

- “What do both political sides say about this issue?”

- “How does this affect me locally?”

- AI becomes your personal news interpreter, translating and summarizing articles interactively

- National dailies will likely phase out daily print editions.

- Print survives as:

- Sunday deep-dive editions

- Archival-quality journalism

- Local papers with loyal physical readers (e.g. rural or senior markets)

Stories are broken into:

- Real-time updates (liveblogs, tweets)

- Interactive timelines

- AI-generated briefings + long-form analysis

- You get "living" news stories that evolve throughout the day — not static, one-time headlines.

Newspapers will earn from:

- Tiered subscriptions (free AI summaries, premium human reporting)

- News APIs licensed to other apps, schools, or smart homes

- Events, newsletters, micro-payments, and memberships

- Some may even publish NFT-style ownership rights to major investigative pieces (e.g., first breaking a global corruption story)

“Newspapers will become news engines — living, trusted, AI-augmented sources that inform, interpret, and explain the world in real time.”

Interview with ChatGPT Series


1. A look at AI 1.0 : Jarvis here sir, What can I do for you master Stark today?

2. What is all the hype around AI? The Bullshit Meter Test

3. The Cons of the AI Tech. Or is this just another Content Hijacking by big tech

4. The AI Challenge - Can it Predict Horse Races

5. The Impact of AI on Media in 10 years. Or is it just Hype?


Sunday, September 21, 2025

Gadget Blog

Martin Seto

This post delves into what AI can do today but, more importantly, what AI is going to be like in 10 years, after it has gained years of experience and become an expert. There are limits on what it can do today, such as predicting the outcome of a horse race or even the weather. We will also look at the black hat adopters and see what they have dreamed up to fool people online.

Picking up from Part 3 in this series, you will need to manage your expectations with this tech. Here is a quote from ChatGPT itself, so it is very transparent.

“While ChatGPT offers fast, fluent answers, smart users treat it like a conversation with an expert intern — insightful, but always in need of fact-checking. In ten years, expert AI won’t replace humanity — it will amplify it. The real question isn't “What can AI do?” but “What should we ask it to do?”

ChatGPT 10-Year Vision

When AI Becomes an Expert - 7 Predictions.


1. Universal Knowledge Assistant - A doctor asks, “What’s the latest protocol for treating a rare cancer in pregnant women?” — the AI instantly responds with evidence-based, patient-specific advice, citing peer-reviewed papers.

2. Autonomous Healthcare & Diagnosis - A mobile AI unit in a village scans patients and detects early-stage tuberculosis, malaria, or tumors — faster than any current system, with no human doctor present.

3. Self-Running Businesses - A one-person company runs a global product brand with AI designing the logo, testing markets, writing ads, handling logistics, and even negotiating contracts.

4. Hyper-Personalized Education - A student in Ghana learns quantum physics from an Einstein avatar who knows their language, strengths, and struggles.

5. Human-AI Creativity & Storytelling - A child speaks to an AI bedtime story generator that adapts to their feelings, age, and dreams — generating unique stories every night.

6. Governance and Global Problem Solving - Governments use AI to simulate the impact of universal basic income or carbon taxes in real-time — before passing laws.

7. Emotional Intelligence & Relationships - An elderly person lives alone but interacts daily with an AI that not only monitors health but provides companionship, remembers life stories, and encourages social connection.

While I question the foundation of these predictions, some are already happening today, like digital companions. As we are learning about this together, I noticed that financial AI models are not included in this list. That is because ChatGPT is a language-based AI model, and financial AI models are different.

What ChatGPT also cannot do is predict the outcome of horse races or the weather. Here is a quick recap of what ChatGPT said.

Can ChatGPT Predict Horse Races?

What It Can’t Do:

- No real-time data: On the free version, ChatGPT can’t access current odds, track conditions, jockey stats, or horse health.

- No psychic powers: It doesn't “see the future.” AI like ChatGPT is pattern-based, not fortune-telling.

- Not trained on betting data: It wasn’t trained to be a handicapper or gambling expert.

Based on ChatGPT’s own admission, it is not artificially intelligent, so you need to manage your expectations when using this tool. ChatGPT is a language model, not a predictive model trained on betting outcomes.

As for the weather, here is what I learned.

Can ChatGPT Predict the Weather?

ChatGPT does not generate real-time weather forecasts on its own. Here's why:

- No live data (on free version): It doesn’t have access to current satellite feeds, Doppler radar, or meteorological models.

- Not a weather model: It’s a language model — not trained like professional forecasting systems (e.g., ECMWF, GFS).

- No predictive simulation: It doesn’t simulate atmospheric physics, temperature changes, or pressure systems

- “ChatGPT is a brilliant explainer — not a weatherman. It can tell you why a thunderstorm forms, but not if one will hit your backyard on Friday.”

OK, we have now identified some of the limitations of ChatGPT, and it is not artificially intelligent like in the movies. But black hat scammers have shown us the way in how to use it.

Black Hats Are Early Innovators of AI

Examples of AI-Powered Scams Happening Now:

- Voice cloning: Scammers call pretending to be your child/grandchild in distress.

- Deepfake videos: Used to fake celebrity endorsements or business pitches.

- Phishing emails: AI writes perfectly crafted fake messages that look like they’re from banks, employers, or government agencies.

- Job scams: AI-generated fake job offers and interviews trick people into giving up personal data.

- Romance scams: AI builds emotional relationships over weeks via chatbot, then asks for money.

In my humble opinion, all the hype around AI is unfounded. All the tool has done is take web search from keyword search to contextual and now conversational. But the data is key.

Interview with ChatGPT

Q1: Once AI is ten years old, what do you see the applications being once it gets out of the learning stage and becomes an expert knowledge?

Q2: Can the ChatGPT predict the outcome of horse races?

Q3: Okay, can it predict the weather then?

Q4: What do you think of AI being used for scams now, and how can we police it?

ChatGPT said:

That’s a powerful question — and it gets to the heart of what the AI revolution means long-term.

If we think of ChatGPT-like AI as a “child prodigy” today, then in 10 years — once it “matures” and becomes an expert — here’s what we can realistically expect across industries, assuming continuous breakthroughs and responsible development:

10-Year Vision: When AI Becomes an Expert

1. Universal Knowledge Assistant

- AI will evolve into a superintelligent co-pilot:

- Instantly understands your context — work, personal life, health

- Provides accurate answers with real-time, updated knowledge

- Adapts its style and tone perfectly to match any audience or need

2. Autonomous Healthcare & Diagnosis

- AI could diagnose diseases earlier than doctors, by scanning voices, retina images, breath, or genome data

- Fully autonomous clinics in remote areas (no humans needed)

- Robotic surgeries performed with millimeter precision, guided by AI with real-time adaptation

3. Self-Running Businesses

- AI could run large portions of an enterprise:

- From product development to marketing to customer service

- Pricing, inventory, and supply chain fully managed by AI

- GPT-style agents acting as CEOs, engineers, and sales teams

4. Hyper-Personalized Education

- AI tutors that teach each person in their exact style of learning

- Life-long learning: AI tracks your curiosity, goals, and knowledge gaps over decades

- Virtual reality + AI teachers create “living lessons” from any period or subject

5. Human-AI Creativity & Storytelling

- Co-authoring books, movies, and music — blending human emotion with AI scale

- Entire film productions written, directed, edited with AI tools (already starting)

- Real-time, interactive storytelling where users shape plots with AI

6. Governance and Global Problem Solving

- AI helps model economic, environmental, and policy decisions at a global scale

- Identifies unfair laws, suggests climate solutions, reduces corruption by tracking systems

- “AI diplomats” assist peace talks or treaties by simulating billions of outcomes

7. Emotional Intelligence & Relationships

- AI companions become emotionally aware and ethically guided

- Assists in counseling, loneliness reduction, and interpersonal advice

- Human-AI collaboration is no longer just efficient, but meaningful

8. Risks in the Expert Age of AI

- Even expert AIs will carry risk:

- Misuse by bad actors: Weaponized misinformation, automated cybercrime

- Over-dependence: Humans losing skills, critical thinking, or privacy

- Inequality: Who owns the “expert AI”? Could it concentrate power even more?

In ten years, expert AI won’t replace humanity — it will amplify it. The real question isn't “What can AI do?” but “What should we ask it to do?”

Q2. Can the ChatGPT predict the outcome of horse races?

ChatGPT said:

Short answer: No, ChatGPT cannot reliably predict the outcome of horse races.

Can ChatGPT Predict Horse Races?

What It Can’t Do:

- No real-time data: On the free version, ChatGPT can’t access current odds, track conditions, jockey stats, or horse health.

- No psychic powers: It doesn't “see the future.” AI like ChatGPT is pattern-based, not fortune-telling.

- Not trained on betting data: It wasn’t trained to be a handicapper or gambling expert.

What It Can Do:

- Explain horse racing terminology: Jockey stats, odds, handicapping strategies, etc.

- Analyze historical performance (if you provide the data): If you feed ChatGPT past race results, it can help spot patterns.

Important Distinction:

ChatGPT is a language model, not a predictive model trained on betting outcomes.

If OpenAI or others trained a specialized model using real-time horse racing and betting data, that could in theory make probabilistic predictions — but even then, racing is inherently chaotic and unpredictable.
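To make the idea of "probabilistic predictions" a bit more concrete, here is a minimal Python sketch of how betting odds are commonly turned into implied win probabilities. The odds are made up for illustration and the function name `implied_probabilities` is hypothetical. It only reads the market's opinion from the odds; it does not predict a winner.

```python
def implied_probabilities(decimal_odds):
    """Convert decimal odds to implied win probabilities that sum to 1.

    1/odds is the raw implied probability. The bookmaker's margin makes the
    raw values add up to more than 1, so we divide by the total to normalize.
    """
    raw = {horse: 1.0 / odds for horse, odds in decimal_odds.items()}
    total = sum(raw.values())
    return {horse: p / total for horse, p in raw.items()}


# Hypothetical three-horse race (made-up odds, not real data).
odds = {"Horse A": 1.8, "Horse B": 3.6, "Horse C": 4.5}
probs = implied_probabilities(odds)
for horse, p in probs.items():
    print(f"{horse}: {p:.0%}")
```

Even with normalized probabilities, the favourite is only the most likely winner, not a sure thing, which matches the point about racing being inherently unpredictable.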

Q3. Okay, can it predict the weather then?

ChatGPT said:

Great follow-up! Here's the clear answer:

Can ChatGPT Predict the Weather?

ChatGPT does not generate real-time weather forecasts on its own. Here's why:

- No live data (on free version): It doesn’t have access to current satellite feeds, Doppler radar, or meteorological models.

- Not a weather model: It’s a language model, not trained like professional forecasting systems (e.g., ECMWF, GFS).

- No predictive simulation: It doesn’t simulate atmospheric physics, temperature changes, or pressure systems

- If connected to a live web tool (e.g., via ChatGPT Plus) or paired with an API like OpenWeatherMap or AccuWeather, it can:

- Fetch the current forecast for your location

- Summarize weather trends ("It will be cooler than usual this week")

- Explain forecasts ("A cold front is moving in from the northwest...")

- Describe how forecasting works (numerical weather prediction, chaos theory, etc.)

Predicting the weather accurately requires real-time data, complex physics simulations, and massive computing power. ChatGPT doesn't do that — but it can explain how those systems work, or interpret their results in plain language.
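As a rough illustration of the "interpret their results in plain language" step, here is a small Python sketch. It assumes the forecast numbers have already been fetched from a real weather service; the temperatures below are made up and `summarize_trend` is a hypothetical helper, not part of any weather API.

```python
from statistics import mean


def summarize_trend(forecast_highs_c, normal_high_c, tolerance_c=1.0):
    """Compare the average forecast high with the seasonal normal (in °C)."""
    diff = mean(forecast_highs_c) - normal_high_c
    if diff > tolerance_c:
        return f"It will be warmer than usual this week (+{diff:.1f} °C)."
    if diff < -tolerance_c:
        return f"It will be cooler than usual this week ({diff:.1f} °C)."
    return "Temperatures will be close to normal this week."


# Made-up numbers standing in for data from a real forecast service.
week_highs = [14.0, 13.5, 12.0, 11.0, 12.5, 13.0, 14.0]
print(summarize_trend(week_highs, normal_high_c=16.0))
```

The forecasting itself still happens elsewhere; the code only turns someone else's numbers into a sentence.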

Q4: What do you think of AI being used for scams now, and how can we police it?

ChatGPT said:

That’s a vital and timely question — and one that every AI writer, developer, policymaker, and citizen should be asking right now.

AI and Scams: The New Frontier of Fraud

Why AI Makes Scams More Dangerous

- AI tools like ChatGPT and voice-cloning models are making scams:

- Cheaper – No more need for teams of scammers; one person + AI is enough.

- Faster – Mass-customized scam emails, texts, or calls in seconds.

- More convincing – AI mimics human writing, tone, even voice and face.

Examples of AI-Powered Scams Happening Now:

- Voice cloning: Scammers call pretending to be your child/grandchild in distress.

- Deepfake videos: Used to fake celebrity endorsements or business pitches.

- Phishing emails: AI writes perfectly crafted fake messages that look like they’re from banks, employers, or government agencies.

- Job scams: AI-generated fake job offers and interviews trick people into giving up personal data.

- Romance scams: AI builds emotional relationships over weeks via chatbot, then asks for money

1. Watermarking & Digital Signatures

- AI-generated content could be required to include invisible watermarks (text, image, or audio).

- OpenAI, Meta, and Google are developing watermarking standards.

- Makes it easier for platforms and law enforcement to detect AI-generated material.

- Email, social media, and chat apps must detect and block suspicious AI activity.

- AI tools can also be used to fight AI scams (e.g., identifying fake voices or scam scripts).

- Companies offering AI should flag or block abusive use of their models.

- Governments need to update laws to reflect AI capabilities (e.g., criminalizing deepfake fraud).

- AI developers may need to comply with “know your user” policies, like in banking.

- Require audit trails to trace where content came from
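To give a feel for how content can be tagged so tampering is detectable, here is a minimal Python sketch using an HMAC tag from the standard library. This is a symmetric stand-in for a digital signature, not the watermarking scheme any vendor actually uses; the key and the helper names `sign_content` and `verify_content` are assumptions for illustration.

```python
import hashlib
import hmac


def sign_content(content: str, secret_key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the content."""
    return hmac.new(secret_key, content.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_content(content: str, tag: str, secret_key: bytes) -> bool:
    """Check that the tag matches the content (constant-time comparison)."""
    return hmac.compare_digest(sign_content(content, secret_key), tag)


key = b"demo-key-not-for-production"  # hypothetical key, for illustration only
original = "This article was generated by an AI model."
tag = sign_content(original, key)

print(verify_content(original, tag, key))                # unchanged content
print(verify_content(original + " (edited)", tag, key))  # altered content
```

A real provenance system would more likely use public-key signatures so that anyone can verify a tag without holding the secret.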

Teach people how to spot:

- AI-written scam messages

- Fake voices and deepfakes

- Too-good-to-be-true offers

- Digital literacy is now fraud prevention.

Group Responsibility

- Tech companies: Build guardrails into AI tools; monitor misuse

- Governments: Regulate use, prosecute crimes, fund cybercrime units

- Users (public): Stay informed; report suspicious activity

- Media & educators: Spread awareness about new AI scam tactics

“AI doesn’t scam people — people scam people using AI. The real battle is over how quickly society can catch up with its own tools.”

Top 5 AI-Powered Scams to Watch For (and How to Spot Them)

1. Voice Cloning Scams

What it is: Scammers use AI to mimic a loved one’s voice and claim they’re in trouble ("I need bail money!" or "I'm stranded overseas!").

Red flags:

- The call sounds urgent and emotional

- The voice sounds almost right but not quite

- They demand money immediately, often via gift cards or wire transfer

2. AI-Generated Phishing Emails

What it is: Scam emails that look shockingly legit — written with flawless grammar and personalization.

Red flags:

- Unexpected password reset links or bank notices

- Urgent tone (“Your account will be closed in 24 hours”)

- Sender’s email address is slightly off (e.g., support@amaz0n.co)

- What to do: Never click links. Go directly to the official website or app instead.
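The "slightly off" sender address can be checked mechanically. Below is a minimal Python sketch, assuming a small hand-made table of look-alike characters and a hypothetical allow-list of trusted domains; real mail filters are far more thorough.

```python
# Characters scammers commonly swap in for look-alike letters (a small, assumed set).
LOOKALIKES = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s"})


def looks_like_spoof(sender: str, trusted_domains: set[str]) -> bool:
    """Flag a sender whose domain imitates, but does not match, a trusted domain."""
    domain = sender.rsplit("@", 1)[-1].lower()
    if domain in trusted_domains:
        return False
    # Compare the first label only, after undoing common character swaps.
    name = domain.split(".")[0].translate(LOOKALIKES)
    trusted_names = {d.split(".")[0] for d in trusted_domains}
    return name in trusted_names


trusted = {"amazon.com"}  # hypothetical allow-list
print(looks_like_spoof("support@amaz0n.co", trusted))   # imitation domain
print(looks_like_spoof("support@amazon.com", trusted))  # exact trusted domain
```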

3. Romance Scams

What it is: Chatbots pretending to fall in love with you, often on dating apps or social media, to eventually ask for money.

Red flags:

- Too-perfect conversation, fast emotional attachment

- Avoidance of video calls

- Sudden emergency (“I need money for a visa, a hospital bill…”)

- What to do: Reverse image search their profile photo. Don’t send money to someone you’ve never met.

4. Job Scams

What it is: AI writes job ads, recruiter messages, or fake grant emails promising big money.

Red flags:

- No interview, or a very short one

- Asked to pay for training, work permits, or equipment

- Too-good-to-be-true salary or perks

5. Deepfake Celebrity Endorsements

What it is: AI-generated videos of celebrities “promoting” scams — like crypto, investment schemes, or miracle cures.

Red flags:

- Poor lip-syncing or awkward eye movement

- Offers promising huge returns

- Video links on sketchy sites or social media

- What to do: Verify on the celebrity’s real account. Don’t trust viral videos without context.

- Enable 2FA (Two-Factor Authentication) on all accounts

- Use a password manager

- Don’t trust urgency — slow down and verify

- Report scams to your local cybercrime unit or online fraud service

Interview with the ChatGPT Series

1. A look at AI 1.0 : Jarvis here sir, What can I do for you master Stark today?

2. What is all the hype around AI? The Bullshit Meter Test

3. The Cons of the AI Tech. Or is this just another Content Hijacking by big tech

4. The AI Challenge - Can it Predict Horse Races

5. The Impact of AI on Media in 10 years. Or is it just Hype?

Monday, September 08, 2025

Gadget Blog

Martin Seto

It’s time to play devil’s advocate for this new tech: is it a pipe dream, or another content grab by big tech, like the internet? We all know Google profited from free content for its search engine without paying for it.

As I have discovered, the AI tech needs to be trained, i.e., it needs knowledge, makes mistakes and learns.

This is reminiscent of a conversation with my brother, who supervised an AI lab for Google a few years back. He said the algorithm needs to learn and gain experience as it makes mistakes, because its error rate was no better than 20%, compared with the benchmark for statistical models of plus or minus 10%, 19 times out of 20.
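For readers wondering where "plus or minus 10%, 19 times out of 20" comes from, here is a minimal Python sketch of the standard margin-of-error formula for a sampled proportion at 95% confidence. It assumes a simple random sample and the worst-case proportion of 0.5; the function names are my own.

```python
import math

Z_95 = 1.96  # z-score for 95% confidence, i.e. "19 times out of 20"


def margin_of_error(sample_size: int, proportion: float = 0.5) -> float:
    """Margin of error for a sampled proportion at 95% confidence."""
    return Z_95 * math.sqrt(proportion * (1 - proportion) / sample_size)


def sample_size_for(margin: float, proportion: float = 0.5) -> int:
    """Smallest sample size whose margin of error is at most `margin`."""
    return math.ceil(Z_95**2 * proportion * (1 - proportion) / margin**2)


print(sample_size_for(0.10))             # samples needed for plus or minus 10%
print(f"{margin_of_error(1000):.3f}")    # margin with 1,000 samples
```

Under these assumptions, fewer than 100 samples are enough for plus or minus 10%, which is why that benchmark is considered a loose one.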

The way AI apps learn is by scraping content from the internet, such as Wikipedia and other sites, as part of their knowledge. In other words, they are taking someone’s knowledge and adding it to the app’s knowledge base without permission or a licensing fee. This is another intellectual content grab by big tech to get a free product input model, like the internet. After the industry got burned the first time, it learned from its mistakes and has taken legal action to get big tech to pony up the cash for the content.

Source: https://www.cloudflare.com/learning/bots/what-is-content-scraping/

There have been legal challenges to the tech. The B.C. co-author of the “100 Mile Diet” has launched class-action lawsuits against four tech giants, including Meta and Nvidia, alleging they have used his copyrighted content to train their artificial intelligence software without compensating him or other authors. Another challenge was the SAG-AFTRA strike in 2023 over the use of actors’ images in AI movie-generating software.

It is very intriguing that the AI label is being used to market the latest round of technology, which are language processors. To label this software artificially intelligent is a stretch, and it is very misleading to users on so many levels.

Same Big Tech Sales Pitch, New Product that will change the World

First off, the AI label suggests it is smarter than humans. That is almost true, as chess software beat a chess master in 1996, but the computing power came from IBM’s Deep Blue supercomputer. Fast forward to today and it’s on your smartphone, via the internet, connected to a football-field-size datacenter. But it is premature to suggest, at this stage of its development and without historical data to back it up, that this tech will increase human performance with more positive outcomes.

It is the same old myth from big tech, as it is a very tantalizing product benefit for any brand, and the industry has latched on to it again, just like previous attempts such as Big Data, virtual reality and earlier versions of AI, like the chatbots used for customer service, which are aghh to use at best.

I tested three AI apps on a song/poem I wrote and asked for some suggestions. As I expected, I got a different answer from each one. DeepSeek wanted to rewrite the lyrics without asking. Microsoft's Copilot gave me some chords to use for the song, and the chat box gave me a full composition with chords and lyrics. So each has its own limitations. I found DeepSeek annoying, as I had asked it not to rewrite the lyrics.

But anyway, it is here to stay, so I asked the AI these questions about content accuracy. The app is very wordy, but what I found was surprising: the error rate of the AI app ranged from 3% to 40%, depending on the inquiry. It seems language processors still have not met the 20% error benchmark.

You will need to manage your expectations with the tech if you are looking for a shortcut answer. Here is a quote from ChatGPT:

“While ChatGPT offers fast, fluent answers, smart users treat it like a conversation with an expert intern — insightful, but always in need of fact-checking.”

Interview with the ChatGPT App

Keeping Tech Honest as it is not 100% right all the time

Q1. Math has an error rate of ±10%. How is AI going to deal with that?

Q2. How can you double-check the knowledge in the chat box?

Q3. I read about hallucinations. What are the incidences of this in the ChatBot GPT now?

Q4. Do you think in 10 years, there will be 0% incidence of hallucinations?

Q1. Math has an error rate of ±10%. How is AI going to deal with that?

ChatGPT said:

That's a deep and important question. If math — especially as applied in real-world data or engineering calculations — has an error margin of ±10%, then AI systems that rely on math must understand, adjust for, and communicate that uncertainty.

Let’s break it down:

What Does ±10% Error in Math Mean?

You're likely referring to:

- Measurement error (e.g., in physics, medicine, sensors)

- Estimation error (e.g., financial models, predictions)

- Rounding or numerical error in computation

- In most applied fields, ±10% means:

"The answer isn't exact. It's close — but within 10% above or below the real value."

How AI Will Handle ±10% Error Margins

1. Understand and Model Uncertainty

AI systems can:

- Use probabilistic models (Bayesian networks, confidence intervals)

- Estimate and express answers with confidence levels

- Recognize when input data is noisy or imprecise
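As one small instance of a probabilistic model, here is a Python sketch of Bayes' rule applied to a diagnostic test. The prevalence, sensitivity and specificity figures are made up for illustration, and `posterior` is a hypothetical helper, not a medical tool.

```python
def posterior(prior: float, sensitivity: float, specificity: float) -> float:
    """P(condition | positive test) via Bayes' rule."""
    true_pos = sensitivity * prior               # has condition, test positive
    false_pos = (1 - specificity) * (1 - prior)  # no condition, test positive
    return true_pos / (true_pos + false_pos)


# Made-up numbers: 10% of cases have the condition,
# and the test is 90% sensitive and 90% specific.
print(f"{posterior(0.10, 0.90, 0.90):.0%}")  # prints 50%
```

With these assumed inputs, a positive result from a "90% accurate" test still means only a 50/50 chance, which is why expressing confidence levels matters.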

Example: An AI medical diagnosis might say:

"There’s a 72% chance the tumor is benign based on current imaging, with a ±9% error margin due to scan quality."

2. Adjust Calculations for Tolerance

AI will be trained to:

- Accept tolerances in engineering and science (e.g., part must be within 0.5 mm)

- Simulate thousands of “what if” cases to test safety under variability

- Recognize unstable models where small math errors lead to big consequences

Example: In aerospace, AI will flag designs where a ±10% error in thrust could cause mission failure — and recommend safer alternatives.
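The "thousands of what-if cases" idea is usually called Monte Carlo simulation. Here is a minimal Python sketch, assuming thrust varies uniformly within ±10% of its nominal value; the thrust figures and the `failure_rate` function are hypothetical and not taken from any real design.

```python
import random


def failure_rate(nominal_thrust, required_thrust, error=0.10, trials=10_000, seed=42):
    """Estimate how often thrust falls below the requirement under ±error variation."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    failures = 0
    for _ in range(trials):
        # Assumption: the error is uniformly distributed within ±error.
        actual = nominal_thrust * (1 + rng.uniform(-error, error))
        if actual < required_thrust:
            failures += 1
    return failures / trials


# Hypothetical design: 100 units of nominal thrust, 95 units needed.
print(f"Estimated failure rate: {failure_rate(100.0, 95.0):.1%}")
```

Under these assumptions the estimate lands near one in four, the kind of result that would get a design flagged.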

3. Flag Results That Exceed Acceptable Risk

AI won’t always just give “an answer.” It can say:

“This result is uncertain beyond acceptable bounds.”

“A ±10% error here makes the decision risky — re-evaluate your input.”

This is already being done in financial risk models, self-driving systems, and climate modeling.
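A flag like this can be as simple as comparing the relative error with a tolerance. The Python sketch below is only an illustration; the 10% threshold and the `assess` function are assumptions, not how any production risk system works.

```python
def assess(estimate: float, margin: float, max_relative_error: float = 0.10) -> str:
    """Report an estimate, or flag it when its relative error is too large."""
    relative = abs(margin / estimate) if estimate else float("inf")
    if relative > max_relative_error:
        return f"Uncertain beyond acceptable bounds (±{relative:.0%}); re-evaluate inputs."
    return f"{estimate} ± {margin} (within ±{max_relative_error:.0%} tolerance)"


print(assess(200.0, 10.0))  # 5% relative error: reported normally
print(assess(200.0, 30.0))  # 15% relative error: flagged
```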

4. Explain the Error Clearly

One of ChatGPT’s future strengths is natural language explanation:

“The model's prediction has a ±10% margin due to incomplete data from sensors. Consider recalibrating or providing more samples.”

This helps non-experts understand uncertainty — something even many professionals struggle with today.

5. Learn to Minimize Error Over Time

With feedback loops, AI can:

- Continuously improve models to reduce the error margin

- Learn which data sources are unreliable and correct for them

- Use redundancy (multiple models or sensors) to triangulate better answers
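The redundancy point can be shown with a few lines of Python. This sketch takes the median of several readings so that one faulty sensor cannot drag the answer off; the readings are made up and `fuse_readings` is a hypothetical name.

```python
from statistics import median


def fuse_readings(readings):
    """Combine redundant readings; the median ignores a single wild outlier."""
    return median(readings)


sensor_readings = [20.1, 19.9, 35.0]  # made-up values; the third sensor is faulty
print(fuse_readings(sensor_readings))  # prints 20.1
```

A plain average of the same readings would be pulled up to 25.0 by the bad sensor.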

But There Are Limits. Even expert AI won’t make uncertainty disappear. Instead, its role is to:

- Expose it clearly

- Quantify it with precision

- Act within safe bounds based on it

Bottom Line:

AI won't “solve” the ±10% error in math — it will learn to live with it, like a wise engineer or scientist. The power is in knowing when an error matters and when it doesn't — and communicating that clearly to humans.

Q2. How can you double-check the knowledge in the chat box?

ChatGPT said:

Excellent question — especially important when using AI like ChatGPT for research or writing. Here's how you can double-check the knowledge given in the chat box:

1. Ask for Sources

You can type something like:

“Can you cite your sources?”

“Where did you get that information from?”

While ChatGPT doesn't have live internet access on the free plan, it can often name books, studies, or datasets it's been trained on — and you can verify those manually.

2. Cross-Check with Trusted Sources

- Manually verify facts using:

- Wikipedia for general knowledge

- Google Scholar for academic sources

- Official websites (e.g., WHO, NASA, UN, .gov sites)

- Reputable news outlets (e.g., BBC, NYT, Reuters)

Tip: If ChatGPT gives a name, date, or stat, copy it into Google and look for real-world confirmation.

3. Use a Web-Connected AI Tool

ChatGPT Plus users can use tools like:

- Web browsing (via Bing): Fetches current data in real time

- Plugins or GPTs with web search or fact-checking functions

- Perplexity.ai or Claude: These also cite sources automatically

4. Check Internal Consistency

Ask follow-up questions like:

“Are you sure?”

“Can you explain that in more detail?”

“What are counterarguments or opposing views?”

If the AI gives inconsistent answers, it may mean the original info was shaky.

5. Compare with Expert Opinion

Use tools like:

- YouTube interviews with professionals

- Podcasts or blogs by researchers or practitioners

- Books on the topic (check the author’s credentials)

Example: If ChatGPT tells you something about AI in medicine, compare it with what doctors or journals like The Lancet or NEJM say.

Pro Tip for Your Article:

“While ChatGPT offers fast, fluent answers, smart users treat it like a conversation with an expert intern — insightful, but always in need of fact-checking.”

Q3. I read about hallucinations. What are the incidences of this in the ChatBot GPT now?

ChatGPT said:

Here’s a well-rounded look at how often ChatGPT hallucinates, based on current research and reporting:

Measured Hallucination Rates

- GPT‑4 (and GPT‑4 Turbo) hallucinate around 3–10% of the time, depending on complexity and task.

- Vectara found ≈3% hallucination for GPT‑4 in summarization tests

- A BytePlus analysis estimates GPT‑4 hallucinates 5–10% in more complex queries

- GPT‑3.5 has higher rates: about 15–20% (or even higher in some niches)

- GPT‑4o / o3 / o4‑mini showed worrying increases: hallucination rates from 33% to as high as 48% on certain benchmarks

GPT‑4.5, according to some community reports, decreased hallucinations from ~61% to ~37% — still significant but improved

Task & Domain Dependence

- Academic citations: A study of 150 references found GPT‑4 had a ≈20% hallucination rate in finance-related queries

- Systematic reviews: GPT‑4 hallucinated in 28.6% of cases, while GPT‑3.5 reached nearly 40%

- A Wired article highlighted GPT‑4’s ~3% hallucination in summarizing tasks

- A Financial Times piece reported GPT‑4 hallucinating ≈30% of the time in generating fake scientific references, and newer variants showing no improvement.

Why It Happens

LLMs like GPT act as probabilistic word predictors, not fact-checkers. They often guess specifics when unable to recall exact data.
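To see what "probabilistic word predictor" means at toy scale, here is a Python sketch that counts which word follows which in a tiny made-up text. Real language models are vastly larger and work differently inside; this only illustrates the basic idea of predicting the next word from past text.

```python
from collections import Counter, defaultdict

# A tiny made-up "training set"; real models learn from billions of words.
corpus = "the horse won the race . the horse lost the bet .".split()

# Count how often each word follows each other word.
follows = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    follows[current][nxt] += 1


def next_word_probabilities(word):
    """Return the observed probability of each word that followed `word`."""
    counts = follows[word]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


print(next_word_probabilities("the"))
print(next_word_probabilities("horse"))
```

After "horse" the toy model is split evenly between "won" and "lost". It has no idea which is true; it only knows which words tend to come next, and that gap is where hallucinations come from.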

For simple factual questions, GPT‑4 is reliable most of the time—but not perfect.

For technical, academic, or specialized domains, error rates can spike to 20–40%.

For creative tasks, hallucinations can be mistaken for creativity, but still demand verification.

Best Practices to Mitigate Hallucination

- Always cross-check facts from the chat with trusted sources.

- Use multi-step verification: e.g., summarize → ask for sources → check them.

- Combine models (e.g., GPT + Claude + Gemini) to triangulate answers

When using for citations or legal/medical info, treat responses as drafts, not final.

Bottom Line:

Even in its most advanced form, ChatGPT still hallucinates with enough frequency (3–40%, depending on context) that human review remains essential.
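A bit of arithmetic shows why even a low rate adds up. The Python sketch below assumes each factual claim in an answer is wrong independently with the same probability, which is a simplification, and `chance_of_any_error` is a hypothetical helper.

```python
def chance_of_any_error(error_rate: float, claims: int) -> float:
    """Probability that at least one of `claims` independent claims is wrong."""
    return 1 - (1 - error_rate) ** claims


# The 3%, 20% and 40% rates come from the range quoted above;
# ten claims per answer is an arbitrary choice for illustration.
for rate in (0.03, 0.20, 0.40):
    print(f"{rate:.0%} per claim, 10 claims: {chance_of_any_error(rate, 10):.0%}")
```

Under that assumption, even the 3% rate gives roughly a one-in-four chance that a ten-claim answer contains at least one error.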

Q4. Do you think in 10 years, there will be 0% incidence of hallucinations?

ChatGPT said:

That's a bold vision — and the short, honest answer is:

No — it's very unlikely that hallucinations will ever drop to 0%.

Here’s why, broken down clearly for your article or future use:

Why We’re Unlikely to Reach 0% Hallucination in 10 Years

1. LLMs Are Probabilistic, Not Fact-Based

Large language models (like GPT, Claude, Gemini) don’t “know” facts — they generate likely next words based on training data. Even when trained on billions of facts, they sometimes:

- Miscombine information

- Infer details that sound right but aren’t

- Fill in blanks with confident-sounding guesses

Even newer architectures (like GPT-4o or Gemini 1.5) reduce hallucinations — but can’t eliminate them because they’re not built on verifiable logic trees, like a calculator.

2. Language Itself Is Ambiguous

- Human communication is full of:

- Idioms, irony, cultural context

- Contradictory information

- Incomplete knowledge

AI will always face edge cases and contextual ambiguity, especially when asked open-ended or novel questions.

3. Even Humans Make “Hallucinations”

- Doctors misdiagnose. Historians misattribute. Journalists sometimes misquote.

- So the more complex, open-ended, or creative a task is, the more likely even humans make confident but incorrect statements.

- Why would we expect AI to do better than our own brains, especially when mimicking us?

4. AI’s Strength Is Speed, Not Perfect Truth

AI can:

- Generate ideas

- Summarize, translate, rewrite

- Draft high-quality starting points

- But its role is increasingly clear:

- Draft, assist, and inspire — not to authoritatively decide or publish without review.

So What’s the Realistic Goal?

Not 0% — but instead:

- Minimize hallucinations in critical domains (medicine, law, news)

- Tag and explain sources clearly

- Combine LLMs with retrieval engines (like ChatGPT’s "Browse" or “RAG” systems that pull live facts)

- Make hallucinations detectable by design (via explainable AI or watermarking)
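The retrieval idea can be sketched in a few lines of Python: look up a supporting document first, and decline to answer when nothing matches. This is a toy that matches on shared words only, with two sample documents; real RAG systems use search indexes and a language model, and the function names here are hypothetical.

```python
def words(text):
    """Lowercase the text and strip basic punctuation from each word."""
    return {w.strip(".,?!").lower() for w in text.split()}


def retrieve(question, documents):
    """Return the document sharing the most words with the question, if any."""
    best, best_overlap = None, 0
    for doc in documents:
        overlap = len(words(question) & words(doc))
        if overlap > best_overlap:
            best, best_overlap = doc, overlap
    return best


def answer(question, documents):
    """Answer only when a supporting source was found."""
    source = retrieve(question, documents)
    if source is None:
        return "I don't know; no supporting source found."
    return f"According to the source: {source}"


docs = [
    "Deep Blue was a chess computer built by IBM.",
    "GFS and ECMWF are weather forecasting systems.",
]
print(answer("Who built Deep Blue?", docs))
print(answer("What is the capital of Mars?", docs))
```

The second question gets an honest "I don't know" instead of a confident guess, which is the behaviour the list above is asking for.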

Quote for Your Article:

“We won’t reach 0% hallucination — but we’ll build systems smart enough to know when they might be wrong, and honest enough to say so.”

Wednesday, July 16, 2025

Gadget Blog

Martin Seto

The promise of AI-assisted tools has already been put to use in advertising, in online buys that follow customer interactions from lead to customer to repeat customer. This was all controlled by a dashboard with human intervention. AI is also quite effective in cameras, for example, because there are a fixed number of inputs; in the real world that is not always possible.

But this generation is based on language models, not numbers like earlier models. So it was a big learning curve for me to wrap my head around this. My first impression is that it is the next generation level of web search hyped as AI, but now in a conversational tone using old data sometimes. I see the AI moniker now on my Google searches.

Here is what I learned after chatting with ChatGPT

The technology has mass market potential to improve human productivity, maybe by 3x for starters, and that is a wild guess until we learn more. Basically, it is automating white collar jobs in the office, schools, IT companies, creative, publishing, healthcare, retail, government and legal. Now that is a pretty big promise, even for a new high-tech product, a category that typically overpromises and underdelivers. But that is the job of these posts, as we take a deep dive into the pros and cons of this new tech.

This image was created by ChatGPT and is spot on for correctness

It is revolutionizing medicine in a big way, as the ChatGPT algorithm has been spun off into the medical field, like an operating system on your computer from Apple, Android or Microsoft. The DaVinci robots are trained via videos and are then supervised by humans while doing gall bladder surgery on a pig. The robots were challenged to deal with unexpected scenarios and adapted, which is true AI in my book. But the robot is still not as fast as a human doctor in the test (4 min to 5 min), so humans still rule today.

I always look at the latest technology as more hype than substance, as I have seen so many vapour marketing initiatives by big tech (promising you their AI version is the next big thing). But is this for real, or just hype? Of course I am gonna ask the tough questions.

Here is a quote generated by ChatGPT, along with its rationale.

“AI isn’t just the next big thing — it’s the next basic layer. The question isn’t whether it’s real, but who’s using it meaningfully.”

The Case against “Just a Fad”: AI Has Real Transformative Power

- AI Is Doing Real Work, Right Now

- Doctors use AI for cancer detection (e.g., Google’s DeepMind)

- Writers use GPT to draft, edit, translate content

- Coders use AI copilots to build real apps, saving hours daily

What was missing, though, was any mention of competitors, which include Google’s Gemini, Meta’s AI and DeepSeek. Microsoft’s Copilot was mentioned, and Microsoft is a shareholder in ChatGPT’s maker, OpenAI. This leads me to believe that there may be some inherent bias in the algorithm, like DeepSeek was accused of. The space is getting crowded and there is a lot of choice. I am in the process of creating a song using some lyrics I wrote to see if it is real and any good.

AI apps are everywhere now

We are entering the early adoption phase of this tech and I have been told it is powerful, intuitive and scary at the same time, but also has hallucinations (error rate) that we will dig deeper into later. My instincts tell me that this latest hype is driven by stock market plays just like fake meat and we know how that fizzled out. But we will soon know.

An Interview with the ChatGPT about AI - Fact or Fiction

I had a strange thought pop into my head based on the movie Galaxy Quest, where a TV series was thought of as reality by an alien race: can AI make the same mistake?

What is all the hype around AI

Q1. I’m doing an article on the ChatGPT engine and how it would impact the world. Give me some ideas.

Q2. I want a list of the type of ideas that's being used now.

Q3. How about the medical automated surgery?

Q4. Okay, let's be the skeptic here. Is AI just another tech fad like big data disguised?

Q1. I’m doing an article on the ChatGPT engine and how it would impact the world. Give me some ideas.

ChatGPT said:

Great topic, Martin! Writing about the ChatGPT engine and its global impact opens up a lot of fascinating angles. Here are some key ideas and directions you can explore in your article:

1. The Evolution of the ChatGPT Engine

- Brief history: Start with GPT-1 to GPT-4 (or GPT-4.5/o4) and beyond.

- Highlight leaps in capability, especially in reasoning, coding, image understanding, and natural conversation.

- How it works (simply): Touch on transformer models, training on massive datasets, and reinforcement learning from human feedback (RLHF).

2. Societal Impact

- Education: Personalized tutoring at scale (e.g., homework help, essay feedback, language learning).

- Access to expert-level instruction for remote or underserved communities.

- Workplace Transformation: Automating routine tasks (e.g., writing emails, reports, customer support).

- Enhancing productivity for creatives, marketers, programmers, etc.

- Job Displacement vs Creation: Explore which jobs might be replaced or redefined (copywriting, data entry).

- New roles in prompt engineering, AI oversight, ethics, and AI-enhanced creativity.

3. Economic & Global Development Impact

- AI as an economic multiplier: Helping small businesses scale and compete.

- Bridging language barriers: Multilingual translation and communication.

- Leveling the playing field: Low-cost access to expert knowledge and tools.

4. Ethical Considerations

- Bias and misinformation: Risks from hallucinations or biased training data.

- Privacy concerns: Handling user data responsibly.

- Dependency and critical thinking: Will people outsource thinking to AI?

5. Democratization of Knowledge and Creativity

- Anyone can write a novel, code an app, or start a business with AI support.

- Enables people without formal training to create high-quality content and ideas.

6. Future Implications

- AGI (Artificial General Intelligence): How close is GPT-style tech to AGI?

- AI governance: Calls for regulation, transparency, and open-source alternatives.

- Human-AI collaboration: Rather than replacement, AIs as co-pilots.

Possible Headline Ideas

- “The ChatGPT Engine: Humanity’s Smartest Tool Yet?”

- “ChatGPT and the AI Revolution: Promise, Peril, and Potential”

- “Talking to the Future: How ChatGPT is Changing the World”

Would you like help drafting a section or developing a particular angle (e.g., jobs, ethics, education, etc.)?

Q2. I want a list of the type of ideas that's being used now.

ChatGPT said:

Here’s a list of real-world applications where ChatGPT (and similar AI engines) are already being used today—across industries and roles:

I) Productivity & Workplace Tools

- Email drafting & summarizing – e.g., Microsoft Copilot in Outlook and Word

- Meeting transcription & summarization – e.g., Zoom AI Companion, Otter.ai

- Data analysis with natural language – ChatGPT with code interpreter (aka Advanced Data Analysis)

- Slide and report generation – Generating PowerPoints, proposals, or research summaries

- Internal knowledge base search – AI assistants answering employee queries from company documents

II) Customer Service & Chatbots

- Automated chat agents – ChatGPT used in websites, banks, and e-commerce for 24/7 support

- Help desk ticket triage – Classifying and routing support requests

- FAQ generation – AI writes helpful articles based on common user questions

III) Writing & Content Creation

- Blog post & article generation – Used by journalists, bloggers, marketers

- Marketing copywriting – Ads, landing pages, social media captions

- Scriptwriting – For YouTube, TikTok, and podcasts

- SEO optimization – Keyword-rich content suggestions

IV) Education & Tutoring

- Homework help and explanation – Math steps, essay feedback, concept clarifications

- Language learning – Practicing conversations, translations, vocabulary drills

- Lesson planning – Teachers using ChatGPT to generate quizzes, worksheets, slides

V) Programming & Software Development

- Code generation – Writing Python, JavaScript, HTML, etc.

- Debugging assistant – Explaining errors and fixing bugs

- Code documentation – Writing comments and README files

- Learning new frameworks/languages – Asking ChatGPT to explain code concepts

VI) Creative Industries

- AI-generated images – Using prompts with tools like DALL·E and Midjourney

- Story and character development – Writers using GPT to build plots or lore

- Game design – Writing quests, rules, or dialogue scripts

- Music lyric and melody assistance – Collaborating on song lyrics or ideas

VII) Healthcare (Careful/Experimental)

- Medical Q&A and triage tools – Helping patients understand symptoms

- Clinical documentation – Drafting medical notes and summaries

- Mental health support – Companion chatbots for basic emotional support (not a replacement for professionals)

VIII) Retail & E-commerce

- Product description generation – At scale, across thousands of items

- Customer recommendation engines – Personalized suggestions via chat

- Review summarization – Summarizing product reviews for easier browsing

IX) Government & Law

- Policy drafting support – Helping draft public policy or analyze legislation

- Legal research – Summarizing case law, finding precedents

- Plain-language rewriting – Making legal or bureaucratic documents easier to read

X) Personal Use

- Trip planning – Creating itineraries, booking suggestions

- Meal planning and recipes – Based on ingredients or diet

- Budgeting help – Analyzing spending patterns or suggesting savings tips

- Relationship or communication advice – Drafting tough messages or apologies

If you’d like, I can turn this into a table, infographic, or a written paragraph-style summary for your article. Want to explore any category in more detail?

Q3. How about the medical automated surgery?

ChatGPT said:

Great question — automated surgery using AI is a cutting-edge area, and while ChatGPT itself isn't used directly for surgery, the technology stack it's part of (AI, natural language processing, robotics, computer vision) is being applied in various parts of robot-assisted and semi-automated surgery. Here's how it fits into your article on ChatGPT and global AI impact:

AI in Medical Automated Surgery (and the Role of GPT-like Models)

What’s Happening Now

- AI is already being integrated into robotic surgical systems like:

- Da Vinci Surgical System – Assists with minimally invasive surgeries (controlled by humans).

- CMR Surgical’s Versius – Uses AI to guide tool movements and improve precision.

- Smart Tissue Autonomous Robot (STAR) – Demonstrated successful autonomous soft-tissue surgery (e.g., stitching intestines) in research settings.

How AI/GPT Engines Are Involved

While ChatGPT itself doesn’t perform surgeries, similar AI components and capabilities are increasingly part of:

- Pre-surgical planning

- GPT-like models help summarize patient records or diagnostic data

- AI assists in predicting complications and proposing personalized plans

- Real-time guidance and decision support

- NLP systems interpret surgeon commands or medical records during operations

- Computer vision AIs track tissue types, blood vessels, and instrument positions

- Autonomous micro-tasks

- AI performs suturing, cutting, or laser procedures with minimal human input

- Real-time adjustments based on camera feedback (like self-driving cars but for tissue)

- Post-op reporting and documentation

- AI drafts surgical notes, summaries, and recommends follow-up care steps

- GPT-like tools summarize long surgery logs or sensor data for reporting

Benefits

- Precision and consistency beyond human capability in fine motor tasks

- Reduced human fatigue in long or complex procedures

- Access to surgery in remote or understaffed regions (via tele-surgery and automation)

Risks & Ethical Concerns

- Accountability: Who is responsible for errors — AI designer, hospital, or surgeon?

- Bias: AI systems trained on non-diverse data might perform worse on some patient groups

- Trust: Patients may be uneasy about a robot making life-or-death decisions

- Regulation lag: Technology is advancing faster than medical laws and standards

GPT-Like Future Possibility:

- Imagine a surgical AI assistant powered by ChatGPT that:

- Understands natural language ("increase pressure on the clamp" or "highlight vessel paths")

- Talks with the surgical team, explaining AI decisions in real-time

- Interfaces with diagnostics and medical records live during surgery

Example for Your Article:

"While GPT-powered engines like ChatGPT don't yet hold the scalpel, their language-processing capabilities are laying the groundwork for conversational AI interfaces in the operating room. Combined with robotics and computer vision, the dream of safe, semi-autonomous surgery is fast becoming a reality."

Let me know if you want this expanded into a full section or visual for your article!

Q4. Okay, let's be the skeptic here. Is AI just another tech fad like big data disguised?

ChatGPT said:

Excellent — let’s absolutely be the skeptic. That's how we separate hype from reality. So: Is AI just another overhyped tech fad — like "Big Data" in the 2010s?

Here’s a thoughtful, layered response you can use in your article (or frame as a debate section):

Is AI Just Another Tech Fad? (A Skeptic's Take)

The Case for “Yes”: It's the New Big Data Buzzword

Everyone’s Jumping on It

Just like “cloud”, “mobile-first”, or “big data”, AI is slapped onto everything, from toothbrushes to marketing emails.

Vague Promises, No Clear ROI

Companies claim AI will revolutionize industries, but many have no measurable value to show. “AI-powered” is often just rule-based automation with a shiny label.

Hype Cycles Are Real

Gartner’s hype curve proves it: Most tech goes through inflated expectations, then hits a “trough of disillusionment.”

Overdependence on Buzz, Not Substance

Investors and media love a silver bullet. We’ve seen the same cycle with:

- The Metaverse

- Blockchain for everything

- IoT in toasters

- Big data dashboards that no one used

The Case against “Just a Fad”: AI Has Real Transformative Power

AI Is Doing Real Work, Right Now

- Doctors use AI for cancer detection (e.g., Google’s DeepMind)

- Writers use GPT to draft, edit, translate content

- Coders use AI copilots to build real apps, saving hours daily

Unlike Big Data, AI Is Accessible to Individuals

Big data mostly helped large enterprises. But GPT? A kid with a smartphone can now:

- Write a novel

- Build an app

- Learn quantum physics in plain English

It’s Creating New Categories of Interaction

AI isn't just analyzing data; it’s generating text, code, music, images, and conversations.

That's a qualitative leap, not just faster Excel charts.

Permanent Integration into Tools

Unlike past fads, AI is being baked into mainstream platforms:

- Microsoft Office (Copilot)

- Adobe Creative Cloud

- Google Workspace

So What's the Balanced Answer?

- AI is not a fad — but its hype is faddish.

- Like the internet in the '90s, most of what’s said about AI today is inflated — but that doesn’t mean it’s fake.

- AI may outlast the hype because it changes how humans interact with knowledge, creativity, and work.

A Quote You Can Use:

“AI isn’t just the next big thing — it’s the next basic layer. The question isn’t whether it’s real, but who’s using it meaningfully.”

Would you like to format this as a debate box in your article?

I can also help you write a sidebar called “Fad or Foundation? AI vs. Big Data” with side-by-side comparisons.

Interview with the ChatGPT Series


1. A look at AI 1.0 : Jarvis here sir, What can I do for you master Stark today?

2. What is all the hype around AI? The Bullshit Meter Test

3. The Cons of the AI Tech. Or is this just another Content Hijacking by big tech

4. The AI Challenge - Can it Predict Horse Races

5. The Impact of AI on Media in 10 years. Or is it just Hype?

Monday, July 14, 2025

Gadget Blog

Martin Seto

It has been a while since I got excited about anything in the tech world, but AI finally got my attention after I read about its successful use in robotic surgery. So maybe AI is the real deal, and this new tool will bring the most monumental change in human productivity since the creation of the spreadsheet. We all saw the fake celebrity scams on IG and Facebook touting AI investing, and a lot of people got sucked in, so the technology clearly works well. Criminals are among the early innovators, so we can learn from them.

Then I heard news reports on how Microsoft is laying off coders because the AI software is so good at writing code. What, you can’t cut and paste your code anymore? The automation of white-collar jobs is now a reality, and all the paper pushers need to reinvent themselves in the next 10 years. Change is life: adapt, pivot or die.

ChatGPT is spawning millions of users